Virality Project (US): Marketing meets Misinformation

Pseudoscience and government conspiracy theories swirl on social media, though most of them stay largely confined to niche communities. In the case of COVID-19, however, a combination of anger at what some see as overly restrictive government policies, conflicting information about treatments and disease spread, and anxiety about the future has many people searching for facts...and finding misinformation. This dynamic creates an opportunity for determined people and skilled marketers to fill the void by creating content and producing messages designed to be shared widely. One recent example was “Plandemic”, a slickly produced video in which a purported whistleblower mixed health misinformation about COVID-19 into a broader conspiratorial tale of profiteering and cover-ups. Much like the disease it purports to explain, the video traveled rapidly across international boundaries in a matter of days.

Plandemic was one step in a larger process to raise the profile of its subject. SIO had begun to observe an increasing number of posts about Plandemic’s subject, Judy Mikovits, beginning on April 16. For two and a half weeks, we observed a series of cross-platform moments in which Judy Mikovits – a scientist whose work was retracted by journal editors – was recast as an expert whistleblower exposing a vast government cover-up. While it was the “Plandemic” video that propelled her to global notoriety, weeks of planned activity led to its rapid virality.

We analyzed 41,662 posts on Facebook, Instagram, YouTube, and Twitter starting April 15, when anti-vaccine and natural health Facebook pages began to promote Mikovits and her new book. While she had been an anti-vaccine conference speaker for years, social media dynamics suggested that Mikovits’s narratives were now being marketed for far larger mainstream audiences. While most of the early content related to Mikovits stayed within these echo chambers, a well-oiled PR machine propelled the discussion of her claims into larger communities like MAGA and QAnon, which eventually eclipsed the anti-vaccine and natural health communities in sheer volume of posts.

The "Plandemic" trailer was released on May 4th. We gathered data until May 17; by that point, news media and fact-checking organizations had addressed the misinformation for U.S. audiences, but it had begun to spread internationally. In this post, we discuss not only the community dynamics of the spread, but some dynamics of the debunking, offering a look at the lifecycle of a coordinated campaign to create a counter-authority figure and drive a political and economic agenda.

Key Takeaways:

- Content related to Mikovits appeared with increasing frequency within the natural-health and anti-vaccine communities beginning April 15, 2020. Much of it took the form of YouTube videos shared to Facebook. These early videos got some traction in QAnon as well as broadly conspiratorial communities, but largely stayed within these echo chambers until the "Plandemic" video was posted by its creator on May 4.

- After the "Plandemic" video appeared, content related to Mikovits began to spread widely outside of the original collection of communities: over 5,000 posts mentioning her appeared across 1,681 pro-Trump/conservative Groups and Pages, more than 800 posts showed up across 125 “Reopen”-oriented groups, and 875 posts appeared across 494 generic local chat community Facebook Groups. There were additionally over 3,700 niche interest groups with posts mentioning Mikovits that did not fit any of our community classifications.

- Of the 41,662 posts mentioning Mikovits on Facebook and Instagram, the majority had very low engagement; 37,120 (89%) had fewer than 50 engagements, and 11,548 posts (28%) had zero interactions.

- Some of the highest-engagement posts supportive of Mikovits came from verified “blue-check” influencers, including Robert F. Kennedy Jr., Rashid Buttar, and some Italian and Spanish-language influencers.

- 11 of the top 30 high-engagement posts overall appeared on Instagram, despite only 503 Instagram posts in the data set (1.2%).

- Our research also looked at the spread of debunking content. The "Plandemic" video went viral between May 5-7; most debunking pieces appeared between May 6-12. Some were created by news organizations (e.g., BuzzFeed and the New York Times), some by social-media-savvy doctors (ZDoggMD, Doctor Mike), and some were direct posts on science communicator pages (SciBabe). We found that 10 debunking posts on Facebook and Instagram made it into the top-25 highest-engagement posts mentioning Mikovits overall. However, closer examination of the engagement on some of these posts suggests that many top comments were from people angrily challenging the fact-check.

- As the debunking content began to appear and spread within the United States, mentions of Mikovits and shares of the "Plandemic" video were gaining momentum in international communities. We noted rising activity in Italian, Portuguese, Romanian, Vietnamese, Norwegian, Dutch, French, and German. This slight time offset may indicate an opportunity to minimize the global reach of misinformation in the future through rapidly translated debunking articles.
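The percentages quoted in the takeaways above follow directly from the reported counts. The short Python sketch below simply redoes that arithmetic; the function and variable names are ours, chosen for illustration, and only the counts come from the text.

```python
TOTAL_POSTS = 41_662  # posts mentioning Mikovits in the data set


def share(count, total=TOTAL_POSTS):
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


under_50_engagements = share(37_120)    # posts with fewer than 50 engagements
zero_interactions = share(11_548)       # posts with no interactions at all
instagram_share_of_posts = share(503)   # Instagram posts in the data set

# Instagram's share of the top 30 posts, compared with its share of all posts.
instagram_share_of_top_30 = share(11, total=30)
overrepresentation = (11 / 30) / (503 / TOTAL_POSTS)

print(under_50_engagements, zero_interactions, instagram_share_of_posts)
print(instagram_share_of_top_30, round(overrepresentation))
```

The rounded shares agree with the 89%, 28%, and 1.2% figures reported above. The last ratio suggests that Instagram posts were roughly 30 times more common among the top 30 posts than in the data set as a whole.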

Manufacturing an Influencer

The "Plandemic" video was not the first time Judy Mikovits was cast as a whistleblowing hero. Three weeks before it appeared, there was an increase in social media posts mentioning Mikovits – and her new book – within anti-vaccine communities and Twitter hashtags related to Anthony Fauci. Her social media commentary, and book promotion, focused on two themes: first, pseudoscience about vaccines; second, allegations of government cover-ups and intrigue. Mikovits had claimed for years that a wide range of diseases were caused by contaminated vaccines; that trope was adjusted to fit public interest in the coronavirus pandemic via claims that COVID-19 was an engineered virus, and that it was tied to the flu shot. To appeal to the rising Reopen movement, which sees Fauci as an impediment, Mikovits claimed that he and other powerful forces had silenced her, targeting her as part of a vast government cover-up to conceal her research findings. None of this is true.

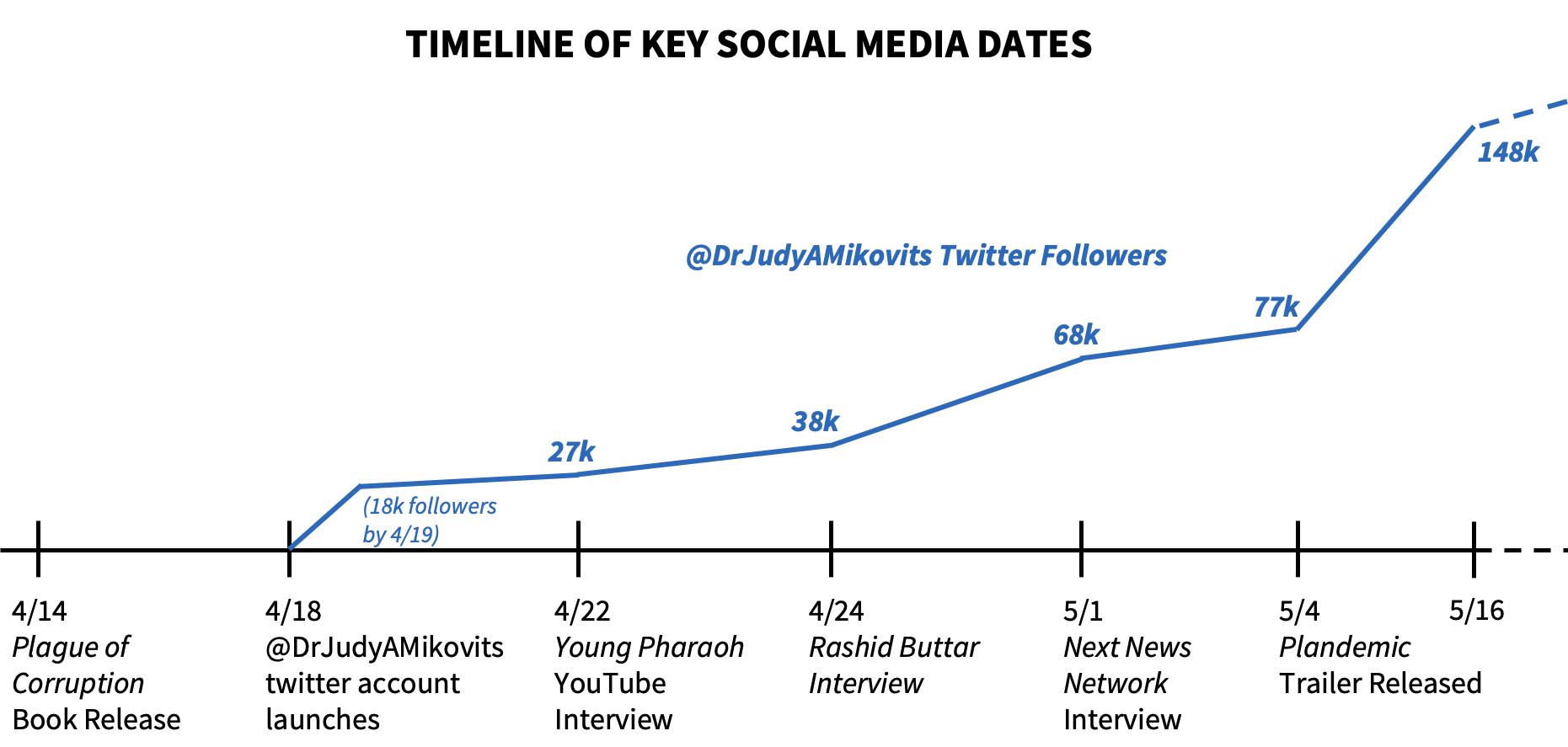

Mikovits (or her team) had previously run a Twitter account for her speaking and writing career on the anti-vaccine conference circuit; it amassed roughly 1,700 followers in three years. Following the release of the new book, a new Twitter account appeared on April 18th: @drjudyamikovits. Within 24 hours it had tweeted only once, yet it had amassed nearly 18,000 followers. Rapid follower growth continued for days, sometimes by thousands overnight, despite no new tweets or prominent mentions. An analysis of the new account’s followers turned up thousands of accounts created within the prior two weeks, as well as clusters of older follower accounts that had been created close together in time and had no tweets or profile pictures.
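One way to run a follower check of this kind is sketched below in Python. It is an illustration only, not the code used in this analysis: the `Follower` record, the 14-day window, the one-hour gap, and the minimum cluster size are all assumptions made for the example, and the follower data would have to be collected separately.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Follower:
    """Hypothetical follower record; field names are illustrative."""
    handle: str
    created_at: datetime
    tweet_count: int
    has_profile_image: bool


def recently_created(followers, as_of, window_days=14):
    """Followers whose accounts were created within window_days before as_of."""
    cutoff = as_of - timedelta(days=window_days)
    return [f for f in followers if cutoff <= f.created_at <= as_of]


def dormant_creation_clusters(followers, max_gap=timedelta(hours=1), min_size=3):
    """Group accounts with no tweets and no profile image by creation time.

    Accounts are sorted by creation time and chained together while the gap
    between consecutive accounts stays within max_gap. Only chains with at
    least min_size accounts are returned.
    """
    dormant = sorted(
        (f for f in followers if f.tweet_count == 0 and not f.has_profile_image),
        key=lambda f: f.created_at,
    )
    clusters, current = [], []
    for follower in dormant:
        if current and follower.created_at - current[-1].created_at > max_gap:
            if len(current) >= min_size:
                clusters.append(current)
            current = []
        current.append(follower)
    if len(current) >= min_size:
        clusters.append(current)
    return clusters
```

The thresholds are judgment calls: a wider gap or a smaller minimum size will surface more clusters, including more that arise by coincidence.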

The first tweet from @drjudyamikovits, since deleted, announced that Zach Vorhies was helping with her social media presence. Vorhies, a self-styled whistleblower involved with Project Veritas (a group known for creating misleading ideological attack videos), had posted his own tweets corroborating that he was running Mikovits’s PR. His motivation appeared tied to Fauci: “Help Me Take Down Fauci”, he wrote in a tweet appealing for donations to get Mikovits’s message out, and he outlined the marketing plan on his GoFundMe page.

The remarkable growth on Twitter, and increasing presence of Mikovits on YouTube channels that had featured Vorhies, line up with this strategy. Journalists have since covered additional facets of Vorhies’s involvement, and University of Washington professor Kate Starbird wrote about the Twitter dynamics of Mikovits and "Plandemic."

YouTube Roadshow

Mikovits was known within anti-vaccine and alternative health communities, but largely unknown to the broader public as her book promotion got underway. YouTube creators with large numbers of subscribers provided a way to put her message in front of large, new audiences. These included alternative medicine influencers like Rashid Buttar, right-wing media such as Next News Network, and generic interview channels such as Valuetainment. Facebook data shows that at least 77% of pre-"Plandemic" mentions of ‘Mikovits’ (9,269 posts) included video content, and 43% of the posts had a YouTube link; BuzzSumo YouTube data showed that the top ten most-watched videos in the early days of the media campaign amassed 2.9 million views. Many of these YouTube videos were taken down because they contained health misinformation, although only after they had achieved high view counts and social reach (for example, Next News Network’s video had at least 765,000 views and 242,600 engagements across Facebook and Twitter before coming down). Several of the takedowns led to secondary videos – and secondary waves of attention – in which channel creators complained about YouTube censorship.

The Gephi network graph below shows some of the links about Mikovits that early-adopter communities were sharing.

Although the early interviews got some reach, high-engagement posts about Mikovits largely stayed within anti-vaccine and alternative-health echo chambers, with some occasional mentions within MAGA and QAnon communities (largely tied to Gateway Pundit coverage).

Then, "Plandemic" appeared…

We inductively created dictionaries of words associated with particular communities, and used these dictionaries to classify the 16,464 Pages, Groups, and Instagram accounts that created the 41,662 posts mentioning Mikovits into 27 distinct communities. The goal was to better understand sharing dynamics at an aggregate community level, rather than the dynamics of individual pages. Data were obtained via CrowdTangle.
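A dictionary-based classifier of this general kind can be sketched in a few lines of Python. The sketch is illustrative only and is not the code used for this analysis: the community names are taken from this post, but the keyword lists, the scoring rule (count whole-word matches and pick the highest count), and the `classify_account` function are assumptions made for the example.

```python
import re

# Hypothetical keyword dictionaries; the actual dictionaries were built
# inductively from the data and are not reproduced here.
COMMUNITY_TERMS = {
    "Anti-vaccine": ["vaccine choice", "vaxxed", "vaccine injury"],
    "Natural health": ["holistic", "natural health", "naturopath"],
    "MAGA/Conservative": ["maga", "trump", "conservative"],
    "QAnon": ["qanon", "wwg1wga", "great awakening"],
    "Reopen": ["reopen", "end the lockdown"],
}


def classify_account(text, dictionaries=COMMUNITY_TERMS, fallback="Miscellaneous"):
    """Assign an account name or description to the best-matching community.

    Each community is scored by the number of whole-word matches of its terms.
    The highest score wins; ties go to the community listed first in the
    dictionary. Text that matches nothing is assigned to the fallback label.
    """
    lowered = text.lower()
    scores = {}
    for community, terms in dictionaries.items():
        hits = sum(
            len(re.findall(r"\b" + re.escape(term) + r"\b", lowered))
            for term in terms
        )
        if hits:
            scores[community] = hits
    if not scores:
        return fallback
    return max(scores, key=scores.get)


for name in ["Reopen Ohio Now", "Trump 2020 MAGA Patriots", "Martin Guitars"]:
    print(name, "->", classify_account(name))
```

A simple scorer like this is transparent and easy to audit, but it depends entirely on the quality of the dictionaries, and anything it cannot match ends up in a catch-all bucket.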

Plandemic: misinformation goes viral

"Plandemic" was posted to Facebook on the afternoon of May 4th by its creator, Mikki Willis. His post was unique in that it did not highlight the content of the video, but instead emphasized that the video would imminently be censored. Stealing a line from the civil rights movement (and poet June Jordan) – “We are the ones we've been waiting for” – he implored his readers to make copies of the video and share it.

"Plandemic" was a pivotal step in framing Mikovits as a whistleblower icon; 40% of the 41,662 posts in the data set included a link to plandemicmovie.com or a mention of Plandemic. The video was rife with misinformation, but it propelled awareness of Mikovits to a broad range of new audiences. Unlike prior videos, it offered polished editing and mainstream-friendly presentation of both Mikovits’s health conspiracies and her vast-government-coverup story. Communities mentioning her expanded to include hundreds of Groups and Pages representing local communities (“New Brunswick Community Bulletin Board”), religious communities (“Love Of Jesus Fellowship”), and a small handful of liberal and left groups (“The Struggle for Equality”). The network graph below shows the significant increase in mentions and the emergence of new communities.

The “Miscellaneous” community includes 3,700 Groups and Pages that didn’t fit neatly into any of the other communities - “Fashion Style”, “Martin Guitars,” etc. This difficulty of classification is itself interesting, because it suggests that "Plandemic" captured the attention of a broad range of audiences. The figure below offers another view of mentions and interactions of Mikovits in the few days immediately surrounding "Plandemic." Interestingly, despite the significant spike in some community shares, such as MAGA, there was no significant rise in engagement. Many of the posts had fewer than 20 interactions.

Post-Plandemic: International Growth and Debunking Narratives

On May 7th, 2020, as the virality of "Plandemic" began to diminish among U.S. audiences, we observed two new dynamics. The first was a ‘second wave’ rise in posting activity about Mikovits in a variety of non-English languages, including Italian, Polish, Russian, and others (below). While we’d noted the early presence of activity in Spanish-language conspiracy communities (a finding observed by other researchers), the number of non-English posts increased. These posts included links not only to "Plandemic" itself, but to other non-English videos discussing the claims.

This graph shows the emergence of posts in numerous languages mentioning Mikovits after the May 4th release of "Plandemic." The video went viral on May 5-6th, while non-English posts increased in volume several days later. (The early Spanish-language posts were part of a cluster of Spanish-language conspiratorial pages.)

The second dynamic that picked up around May 7th, 2020 was the appearance of debunking content from mainstream media, medical and scientific influencers, and fact-checking organizations. We tracked 65 links featuring debunking content created between May 6th and May 12th. These links appeared in 1,132 posts in our 41,662-post data set; most (714) were links to articles; 366 were shares of video or YouTube content.

Debunking is inherently reactive, but the two-day gap between viral misinformation and correction illustrates how the lie goes halfway around the world, as the saying goes, before the truth gets its pants on. The earliest debunking content appeared in English while the misinformation, translated into other languages, was continuing to spread around the world. The bar chart below shows the number of posts of the 65 debunking URLs appearing within various communities (almost all of those posts were in English).

Shares of identified debunking content within communities.

Understanding the complex dynamics around sharing corrections is important to understanding how to address viral misinformation. In this preliminary work, we noted that 6 of these posts ranked among the 25 highest-engagement posts that mentioned ‘Mikovits’ overall. However, engagement stats alone don’t always tell the whole story. For example, some of the engagement comes from the debunker’s own fan base; YouTube influencers who post funny or creative videos get a lot of encouragement from their subscribers, which, while heartening to see, doesn’t mean that their debunking reached fence-sitters or changed minds of those who believed the misinformation.

We looked at some of the posts of debunking links that appeared in more conspiratorial or politically polarized groups; examples appear below. While some posters appeared receptive to correction, others posted the links to debunk the debunking, mock the media’s attempts to ‘silence the truth’, or suggest fellow Group members go downvote the correction videos.

Potential Responses

- Video platforms can reduce viral spread of new videos via recommendation and search engines while videos are evaluated by fact-checkers. This could be done in an automated fashion on videos that mention high-manipulation-risk topics (like COVID) and would give fact-checkers and policy teams more time to make considered decisions.

- Video platforms should consider leaving up the original postings of controversial videos, annotated with the appropriate fact-check, and downranking or eliminating re-uploads. This creates one location that can be used to offer debunking content, both as a pre-roll on the video and in the recommended next videos. Eliminating the video completely is almost impossible, and doing so ensures that the original disinformation goes viral while debunking content is not distributed. Takedowns also have the secondary effect of leading to allegations of censorship that draw attention and even elevate the reputation of the creator or subject within some communities.

Conclusion

The campaign to recast Judy Mikovits as a whistleblower offers a case study in the type of factional network dynamics and cross-platform content spread that will likely happen repeatedly over the coming months, around COVID-19 as well as the 2020 election. Although the activity involved some fake Twitter accounts, there was nothing that crossed the line into coordinated inauthentic behavior -- this was a marketing campaign that pulled ordinary people into the sharing process. However, it was also a marketing campaign that made blatantly false claims and increased confusion and skepticism around vaccines, health authorities, and institutional responses to the pandemic. Platforms have rightly committed to mitigating health misinformation; this example makes clear the need to develop better solutions that avoid after-the-fact content takedowns that turn manipulative charlatans into free-expression martyrs. Further study of cross-platform, cross-faction sharing dynamics around debunking content in particular would help inform fact-checking efforts, and help platforms gauge how to respond to highly-misleading viral videos.

Trendline showing mentions of Mikovits on Facebook Pages and public Groups prior to the May 4th release of Plandemic. Most of the peaks coincided with YouTube appearances shared in Facebook posts, such as Rashid Buttar, a doctor known for cancer quackery, discussing Mikovits/Fauci on his channel on April 19th. Image via CrowdTangle.

Figure 2: A close-up of links shared by the highest number of different communities in the pre-Plandemic period. Interviews of Mikovits during this time period gained the most traction across different communities, led by Rashid Buttar’s four-part coverage, which comprised 4 of the 8 leading links in this figure. Key: MAGA/Conservative in red, QAnon in orange, Conspiracy in green, Miscellaneous in purple, Religious/Spiritual in brick.

Left to right: pre-Plandemic to post-Plandemic URL shares by the different sub-communities in the dataset. The Miscellaneous and the MAGA/Conservative communities increased their traction, while the Italian, Portuguese, News/Media, and Medical/Science communities experienced the most dramatic growth as they emerged on the graph.

Number of posts within classified factional communities for the three days around Plandemic’s peak virality, viewed at an hourly scale. This shows with additional granularity Mikovits’s expansion from mentions in conspiratorial echo chambers to mentions within many different types of communities, as well as the contrast between number of posts and engagement numbers.

A snapshot of the debunking content graph, focusing on the interaction of various groups with the four links that gained the most inter-group traction.

A BuzzFeed News Instagram post debunking Plandemic; although the post drew high engagement, the majority of the top comments dispute the debunking and question the media.

Examples of the dynamics around shares of debunking posts. On the left, the poster is genuinely trying to understand the discrepancy between the video he believed and the fact-check he read. On the right, the poster is asking the community to leave Dislikes on a YouTube fact-check made by a physician creator.
