TikTok just announced the data it’s willing to share. What’s missing?

We watched 100 hours of TikTok videos while waiting for a research API. Will it be worth the wait?

Is the new TikTok API going to be good enough for social media researchers?

In 2022, we analyzed over 100 hours of TikTok videos as part of the Stanford Internet Observatory’s efforts to study election rumors in the run-up to the 2022 US midterms. It was a challenging process: we started by searching TikTok using keywords such as “election” or “vote.” But we soon realized a search-based approach has its limits. Keywords simply were not surfacing many videos that shared election rumors, even though we found those videos promoted on the For You page (FYP). We are not alone in picking an imperfect method for our research plans: a method that is useful on other social media platforms, but seems to miss quite a lot of what is happening on TikTok. Indeed, a recent review of TikTok scholarship found that few papers use TikTok’s recommender system as the means for data collection; in most cases, researchers used within-reach collection methods without justifying why.

When we went back to the drawing board to improve our data collection, we experienced TikTok features, quirks, and bugs that frustrated our work. This blog post shares our observations in light of TikTok’s announcement this week that the company will roll out a research API. A research API is an important and necessary step forward for research and public understanding of social media. However, our challenges last year provide lessons learned for researchers and the TikTok API development process. We hope that these recommendations are considered in the API design.

The API may not account for significant TikTok features

In our election rumor work, we used both TikTok’s mobile app and web versions. Spending time on each, we observed inconsistencies between the two that affected research results. Now, with the TikTok API available, we have concerns that those will remain unresolved.

We identified consistency issues in the following features salient to the study of election rumors:

- Pinned videos are unavailable on the web: Pinned videos are an important cue for researchers about which videos content creators choose to highlight. While those pinned videos are available on the mobile version, they are not marked as pinned on the web version. TikTok’s API, as proposed, seems unlikely to return whether a video was pinned.

- Photo Mode content is unavailable on the web: A few weeks before Election Day, TikTok announced the release of new editing tools, including Photo Mode, which allows users to post images in a rotating carousel format. However, the content of posts in Photo Mode is unavailable to users on the web application. How will TikTok’s API handle photos?

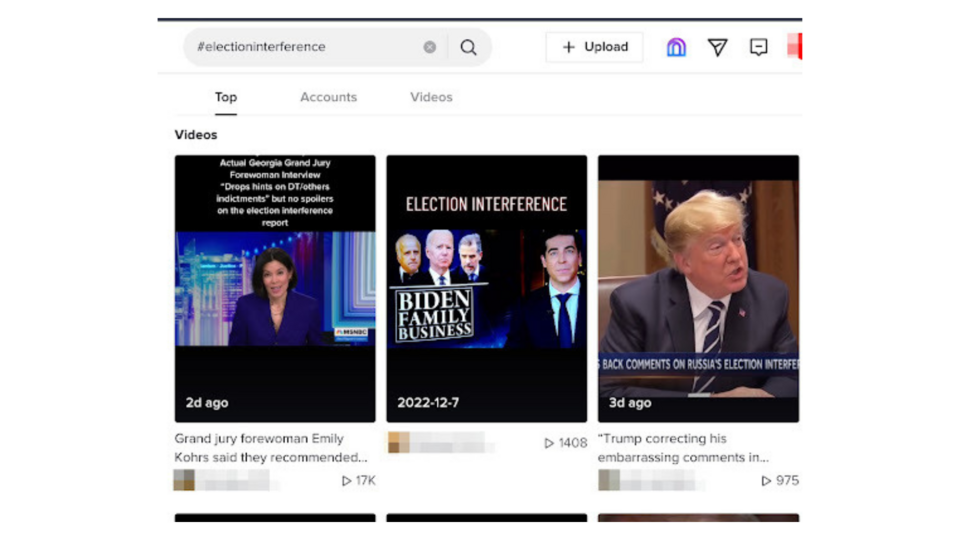

- Keyword search results are inconsistent: Researchers use keywords and hashtags to identify content of interest. But TikTok’s search results display in unexpected ways across web and mobile versions. For example, search results can vary for a banned hashtag depending on where you search on the web. Though “#electioninterference” returns no results when plugged into the URL “https://tiktok.com/tag/”, searching “#electioninterference” in the general search box will yield videos with “election interference” in the video’s pop-up text.

A search for the hashtag “#electioninterference” yields no results on the hashtag search page; using the same “#electioninterference” on the search page (as of February 23, 2022) will surface videos using the phrase “election interference.”

Relatedly, web searches would sometimes fail where in-app searches would succeed. Occasionally, TikTok removes banned hashtags from video captions while leaving videos up; searching those banned hashtags may still surface those same videos. We speculate these differences in search results are due to different degrees of enforcement from TikTok or errors in propagating changes.

These varying search results suggest that TikTok researchers should take caution. While the TikTok API allows for hashtag querying, depending on the design choices of the TikTok API, the data pulled from the API may inaccurately represent users’ actual experience within the website or mobile app.
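One way researchers might quantify this kind of inconsistency is to record the video IDs each surface returns for the same query and measure how much the result sets overlap. The following is a minimal sketch under stated assumptions: the surface names and video IDs are hypothetical placeholders that a researcher would have recorded by hand, and nothing here calls TikTok’s API or reflects its actual response format.

```python
from itertools import combinations


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of video IDs common to both sets, out of all IDs seen in either."""
    union = a | b
    # Two empty result sets are treated as identical.
    return len(a & b) / len(union) if union else 1.0


def compare_surfaces(results: dict[str, set[str]]) -> dict[tuple[str, str], float]:
    """Pairwise overlap between the video IDs that each surface returned."""
    return {
        (x, y): jaccard(results[x], results[y])
        for x, y in combinations(sorted(results), 2)
    }


# Hypothetical IDs noted for one query on three surfaces (illustrative only).
observed = {
    "web_hashtag_page": set(),
    "web_search_box": {"v1", "v2", "v3"},
    "mobile_search": {"v2", "v3", "v4"},
}

overlaps = compare_surfaces(observed)
for pair, score in overlaps.items():
    print(pair, round(score, 2))
```

A low overlap score between surfaces would be a signal to document which surface a dataset came from before drawing conclusions about what users actually saw.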

Overall, these consistency issues complicate the research process. We encourage TikTok to document its various surfaces and features in a format for researchers; a lack of documentation leaves it up to individual researchers to notice and understand their presence and impact.

The API offers no solution for researchers studying TikTok’s recommender system or content moderation

Limitations on FYP Research: Although our search-based approach had mixed results, we found election rumors could be spotted on TikTok's most distinctive feature: the For You page. We developed a protocol to have research analysts scroll through a FYP curated to surface as much election rumor content as possible. The FYP may have surfaced more relevant content than keyword searches, because, as others have observed, TikTok’s recommender system places a premium on sharing new topics to users.

TikTok’s FYP is opaque to researchers, yet TikTok’s recommendations play a central role in a user’s experience. Researchers will have to get creative to understand TikTok’s recommendations using just the research API. Even as the API promises to improve our understanding of which videos are highly viewed, it is unclear what light it can cast on the distribution of infrequently viewed but consequential videos.

TikTok has made efforts to explain the high-level factors contributing to the FYP, but social media scholars will need more data access to understand important topics like election rumors or the effect of TikTok on mental health.

Limitations on Content Moderation Research: It is difficult to assess the reach of violative content before it is removed, or to assess whether a user or TikTok removed violating content. Unlike on other platforms, search results for removed TikTok videos can look the same whether the videos were removed by their creators or by the platform. Banned hashtags and hashtags not in use often look the same in search results. Even where TikTok applies labels, they can be inconsistent: certain hashtags that are labeled on mobile are not labeled on the web.

Additionally, our colleague Sukrit Venkatagiri at the University of Washington argues that TikTok’s requirement that researchers delete data that’s not available upon refreshing the TikTok Research API makes it harder to document violative content.
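To make the concern concrete, here is a rough sketch of what complying with such a deletion requirement could look like on the researcher’s side. It is an illustration under assumptions, not TikTok’s documented workflow: the function name, the record fields, and the idea of a “refreshed” set of still-available video IDs are all hypothetical.

```python
def prune_to_refreshed(
    local: dict[str, dict], refreshed_ids: set[str]
) -> tuple[dict[str, dict], int]:
    """Keep only locally stored records whose IDs are still available.

    Returns the pruned dataset and the number of records that were dropped.
    """
    kept = {vid: rec for vid, rec in local.items() if vid in refreshed_ids}
    return kept, len(local) - len(kept)


# Hypothetical local dataset collected earlier in a study.
local_records = {
    "a": {"caption": "how to register to vote"},
    "b": {"caption": "rumor about ballot counting"},
    "c": {"caption": "polling place hours"},
}

# Hypothetical IDs that a later refresh reports as still available.
still_available = {"a", "c"}

kept, dropped = prune_to_refreshed(local_records, still_available)
print(sorted(kept), dropped)
```

After pruning, all that remains of the removed video is a count. Its caption, and whether the creator or the platform took it down, are no longer part of the dataset, which is exactly the kind of record a content moderation study would need.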

When videos are removed, the message to users is “video currently unavailable.” These messages are often the same whether the user deleted the content or TikTok removed it for a policy violation. This makes TikTok distinct from platforms like Twitter and Instagram, which provide more specific messages explaining why a piece of content is no longer available. These undifferentiated messages impede user and researcher understanding of the content available on the platform and limit transparency about TikTok’s content moderation.

Our Recommendations for TikTok’s API

We recognize it is easy for data access conversations to turn into a laundry list of requests; settling on a set of generally applicable, maintainable data fields is no simple feat. With the recent announcement, we hope TikTok will be responsive to feedback and provide additional information on the research API.

We offer three suggestions rooted in our experience studying election rumors. These recommendations are not exhaustive, but are intended to start a conversation on the data that researchers would find most useful and that would further the public interest.

Recommendation 1: Provide Access to Audio Transcripts

Audio plays an important role in TikTok’s information ecosystem. The preliminary research API documentation indicates Music IDs will be searchable; this opens avenues for community detection, as content creators often encourage users to find related videos by clicking through to a video’s related TikTok audio page.

However, it is unclear to us whether TikTok’s API will allow researchers to search the platform by transcribed audio. By making audio transcripts and other TikTok audio details searchable and downloadable fields, TikTok would improve research access. Without those transcripts, researchers face the daunting task of transcribing audio themselves, which can be computationally intensive and time-consuming. If transcripts are available, allowing researchers to download them will shift resource constraints off researchers.

Recommendation 2: Provide Access to Spread Metrics

As we aim to understand how rumors spread on TikTok, metrics describing how viewers discovered a piece of content (FYP, hashtag page, user’s page, search, etc.) would help us understand the flow of content, especially since we lack a deeper understanding of the algorithmic decisions TikTok makes when recommending videos on the For You page. It was recently reported that TikTok employees have access to a “heating feature” that allows the company to manually push selected videos into users’ For You feeds. This revelation exemplifies how little transparency researchers and the public currently have into how content is recommended. A discoverability metric, like the ones available to creators, would allow researchers to understand how content reaches users and the resulting patterns in the flow of information.

Recommendation 3: Provide a Solution for Qualified Researchers Seeking Historical Violative Content

Though we observed significant evidence of content moderation on TikTok, we found that the patchwork of enforcement complicates our ability to assess the reach and content of election rumors. TikTok announced it will share a moderation system API that will allow researchers to “evaluate [their] content moderation systems and examine existing content available on [the] platform.” For this to be effective, as other researchers have proposed, this API might include an archive of content that was removed for violating policies, with clear documentation. If TikTok has a special policy category (e.g., elections, health, etc.) for such a scenario, the content could be preserved and documented for researchers to assess the events, along with the effectiveness of moderation on the platform. TikTok will face legal hurdles and reasonable concerns implementing a solution, but further effort on this front by TikTok could aid public interest studies.

The TikTok API rollout has left us with a number of unresolved questions. These include:

- Comments returned by the API purportedly remove personal information. How will this system work, what personal information might it miss, and what errors might it introduce?

- The Research API terms of service state that TikTok will set the API quota at its discretion. But researchers will likely be in a better position to assess how much data they need for their research questions. More clarity on this point from TikTok would be appreciated.

- How will the research API handle advertisement data and sponsored content?

How TikTok shares its data will dramatically shape how researchers study TikTok. Now that TikTok will make search at scale easier while keeping other forms of data access hard, researchers are at a greater risk of defaulting to the same methods that initially gave us — and may give them — a flawed picture of what’s happening on TikTok.

Thanks to Elena Cryst, Renée DiResta, John Perrino, Mike Caulfield, Rachel Moran, Ilari Papa, and Kate Starbird for their helpful feedback on this piece.